Sgd machine learning top

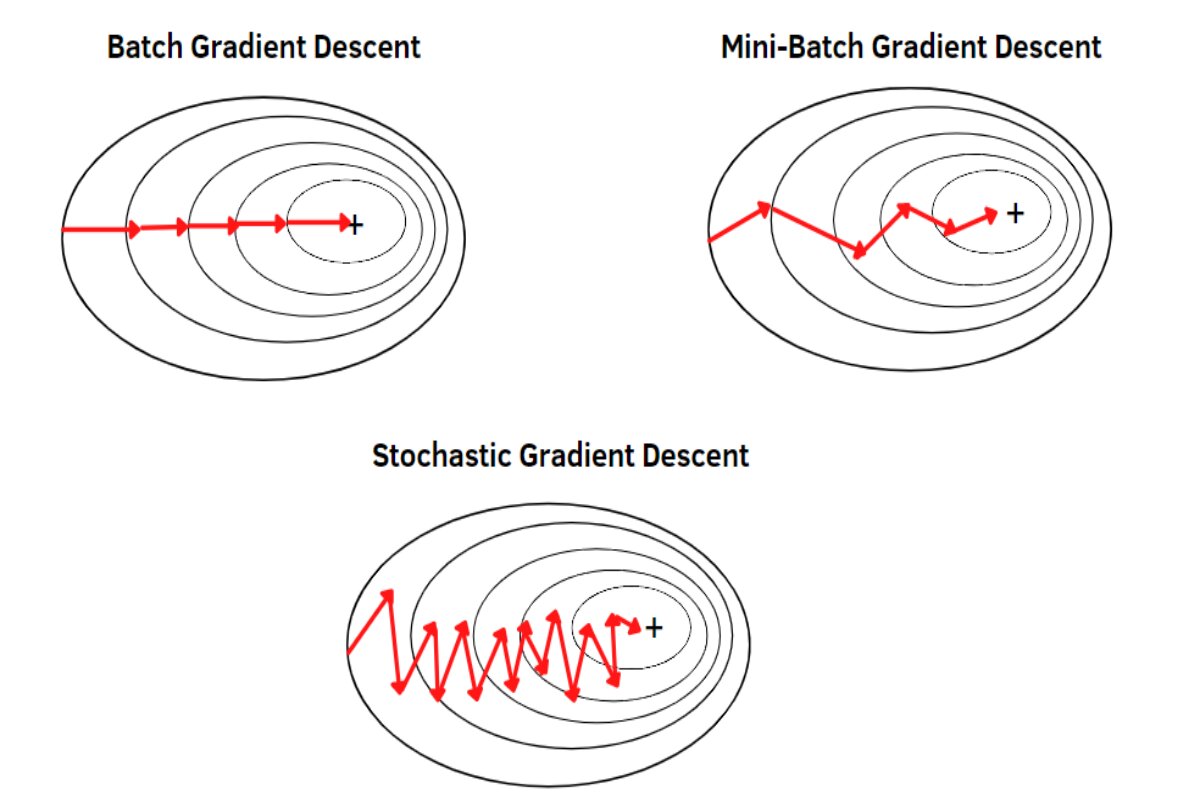


$70.00

SAVE 50% OFF

$35.00

$0 today, followed by 3 monthly payments of $11.67, interest-free.


EfficientDL Mini batch Gradient Descent Explained StatusNeo

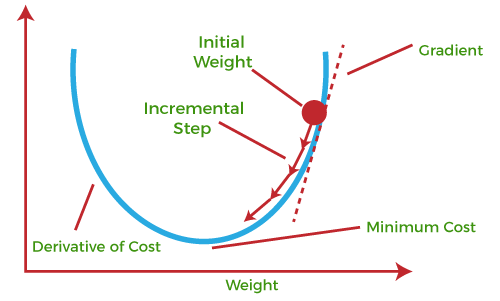

Gradient Descent in Machine Learning Javatpoint

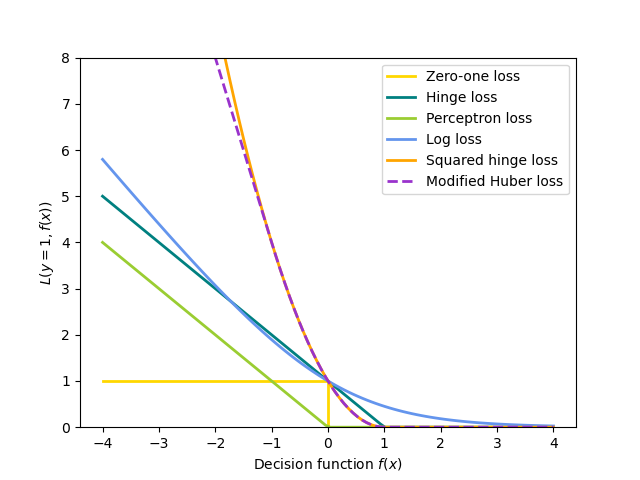

1.5. Stochastic Gradient Descent scikit-learn 1.4.1 documentation

Which Optimizer should I use for my ML Project

12.4. Stochastic Gradient Descent Dive into Deep Learning 1.0.3

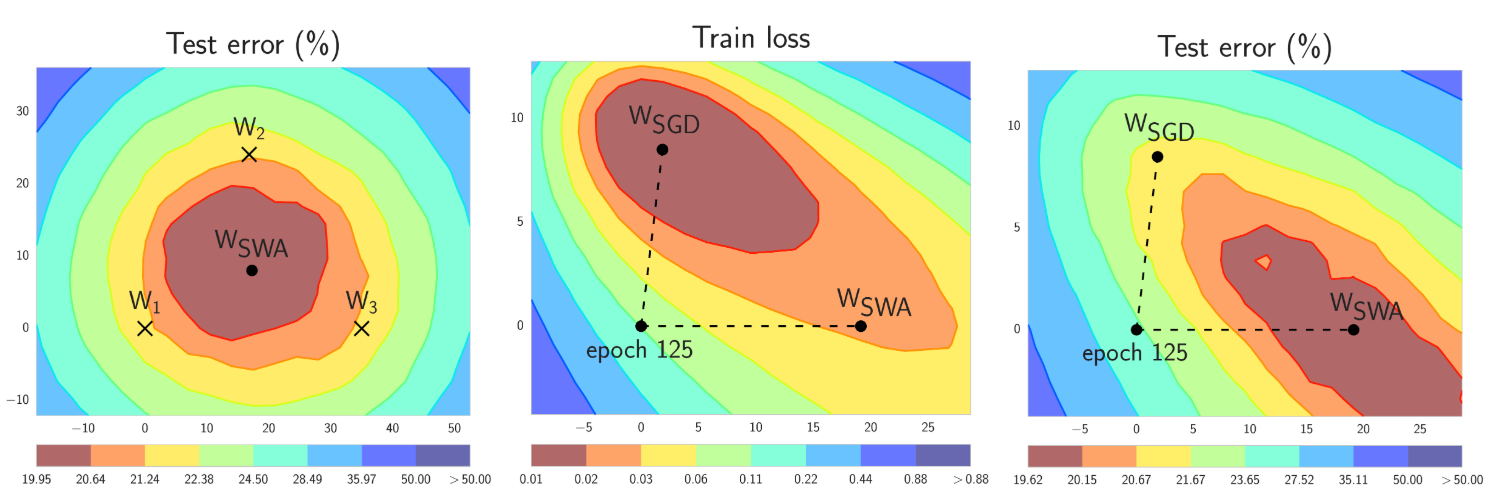

Stochastic Weight Averaging in PyTorch PyTorch

Description

Product code: Sgd machine learning top

SGD Explained Papers With Code top, Implementing SGD From Scratch. Custom Implementation of Stochastic top, A Complete Guide to Stochastic Gradient Descent SGD top, Assessing Generalization of SGD via Disagreement Machine top, Stochastic Gradient Descent on your microcontroller top, ML Stochastic Gradient Descent SGD GeeksforGeeks top, Introduction to SGD Classifier Michael Fuchs Python top, SGD with Momentum Explained Papers With Code top, Learning Optimizers in Deep Learning by Abhishek Shah Medium top, Stochastic gradient descent SGD Statistics for Machine top, The Stochastic Gradient Descent SGD Learning Rate A.I. Shelf top, Stochastic gradient descent Wikipedia top, Gradient Descent and its Types Analytics Vidhya top, Stochastic Gradient Descent In ML Explained How To Implement top, Variance Reduction Methods top, An overview of gradient descent optimization algorithms top, Gradient Descent vs Stochastic GD vs Mini Batch SGD by Ethan top, Stochastic Gradient Descent. Download Scientific Diagram top, Gradient Based Optimizers in Deep Learning Analytics Vidhya top, Stochastic Gradient Descent Clearly Explained top, EfficientDL Mini batch Gradient Descent Explained StatusNeo top, Gradient Descent in Machine Learning Javatpoint top, 1.5. Stochastic Gradient Descent scikit-learn 1.4.1 documentation top, Which Optimizer should I use for my ML Project top, 12.4. Stochastic Gradient Descent Dive into Deep Learning 1.0.3 top, Stochastic Weight Averaging in PyTorch PyTorch top, Gradient Descent GD and Stochastic Gradient Descent SGD by top, ICLR 2019 Fast as Adam Good as SGD New Optimizer Has Both top, deep learning Does Minibatch reduce drawback of SGD Data top, Deep Learning with Differential Privacy DP SGD Explained top, Stochastic Gradient Descent Classifier Machine Learning 2 top, Analysis of preconditioned stochastic gradient descent with non top, Deep Learning Optimizers. SGD with momentum Adagrad Adadelta top.